Twenty20 Systems Earns Gold in the Workato Automation League Partner Program for Exceptional Performance

April 18, 2024

Bridging the Gap: Replicating MuleSoft’s Scatter-Gather in Workato

September 30, 2024

Twenty20 Systems Earns Gold in the Workato Automation League Partner Program for Exceptional Performance

April 18, 2024

Bridging the Gap: Replicating MuleSoft’s Scatter-Gather in Workato

September 30, 2024

Using Workato to Migrate MuleSoft DataWeave Transformations

Introduction 📚

Transitioning from MuleSoft to Workato poses a significant challenge: replicating the robust data transformation capabilities inherent in MuleSoft’s DataWeave. DataWeave, a specialized language within MuleSoft, excels in transforming data across diverse formats seamlessly. This article delves into the intricate process of emulating complex DataWeave transformations within Workato, harnessing its SQL functionalities and expansive array of connectors.

As organizations increasingly seek agile and scalable solutions for data integration, understanding how to effectively migrate and adapt data transformation processes becomes paramount. By exploring Workato’s capabilities in depth, we uncover strategies to maintain and enhance data transformation workflows originally executed through DataWeave. Through this exploration, we examine pathways for achieving seamless and efficient data integration within the Workato ecosystem.

Use Case Overview 📝

In this scenario, we were tasked with migrating a complex data transformation process for an enterprise customer. This process involved working with data retrieved from Snowflake, a cloud-based data warehousing service, and DUCO, a platform used to consolidate, standardize, and reconcile diverse data types. The primary objective was to apply specific conditions to filter the payload effectively and then add static values to create an array of values.

The migration was crucial for the enterprise customer as it aimed to streamline their data processing workflow, enhance data accuracy, and improve overall operational efficiency. By leveraging the capabilities of Snowflake for data storage and DUCO for data reconciliation, the goal was to ensure a seamless, automated transformation process that could handle large volumes of data with precision.

This use case highlights the importance of integrating robust data management tools to achieve optimal performance and reliability in data-driven environments.

Original MuleSoft DataWeave Transformation 💡

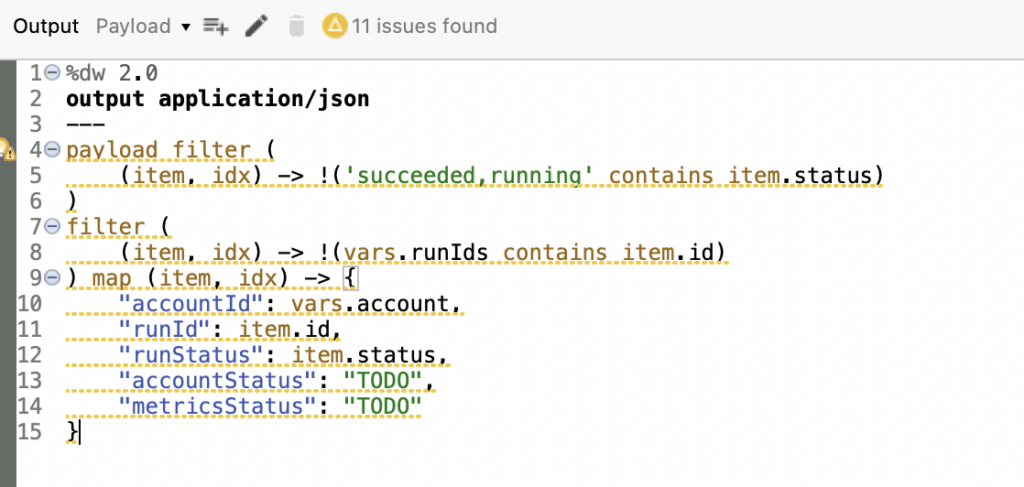

Here is the original DataWeave transformation used in MuleSoft:

This DataWeave script performs a critical transformation on the payload data. It involves two key filtering steps and a mapping process to create a refined output.

- Filtering the Payload: The initial filter (payload_filter) excludes items where the status is either 'succeeded' or 'running'. This ensures that only items with other statuses are processed further.

- Filtering by Run IDs: The second filter ensures that only items with runId present in the vars.runIds list are included. This step is crucial for isolating the relevant data entries for further processing.

- Mapping the Results: The final step maps each filtered item to a new structure. It extracts essential fields such as runId, accountId, and runStatus, while adding static placeholder values for accountStatus and metricsStatus.

Here's a detailed breakdown of the DataWeave script:

This transformation script is essential for the accurate and efficient processing of data within the MuleSoft environment. By filtering and mapping the data, it ensures that only the most relevant information is retained, which streamlines subsequent data handling and analysis tasks.

Migrating to Workato 🔄

To achieve the same results in Workato, we leveraged its robust SQL capabilities to filter and map the data. Here's a detailed step-by-step guide to the process:

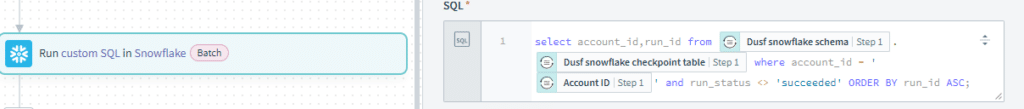

Step 1: Retrieve Data from Snowflake ❄️

The first step involves using Workato's Snowflake connector to execute a SQL query. This query retrieves the necessary data (vars.runIds) from the Snowflake database. The retrieved data forms the basis for subsequent filtering and mapping operations.

Here's how the Snowflake query is structured:

This query is designed to:

- Select Relevant Fields: It retrieves the account_id and run_id from the Snowflake schema.

- Filter Based on Account ID: It ensures that only the data corresponding to a specific account_id is selected.

- Exclude Certain Statuses: The query filters out records where the run_status is 'succeeded', ensuring only relevant data is processed.

- Order the Results: The data is ordered by run_id in ascending order, facilitating easier subsequent operations.

Using this approach, we efficiently pull the required data into Workato, setting the stage for the next steps in our transformation process.

Step 2: Fetch Data from DUCO 🌐

Next, we utilize the custom DUCO connector, developed by Twenty20 Systems, to retrieve runId and accountId. This connector enhances our data integration capabilities by seamlessly fetching and reconciling process runs from DUCO.

Using the DUCO connector, we configure the action to fetch process runs. This involves setting up the necessary parameters to ensure we retrieve the relevant runId and accountId values.

Key Configurations:

- Connector Setup: Ensure the DUCO connector is correctly authenticated and authorized to access the required data.

- Data Retrieval: Specify the criteria for the data fetch to ensure only relevant process runs are included. This typically involves defining filters based on specific conditions, such as status or date range.

By integrating data from DUCO, we enhance the dataset initially retrieved from Snowflake, ensuring that our transformation process has all the necessary information to proceed effectively. This step is crucial for creating a unified and accurate dataset, which will be used in subsequent transformation and mapping operations.

Step 3: Transform Data Using SQL Collection by Workato 🛠️

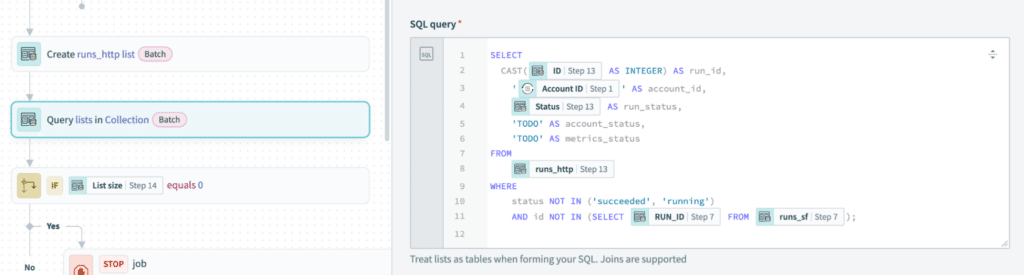

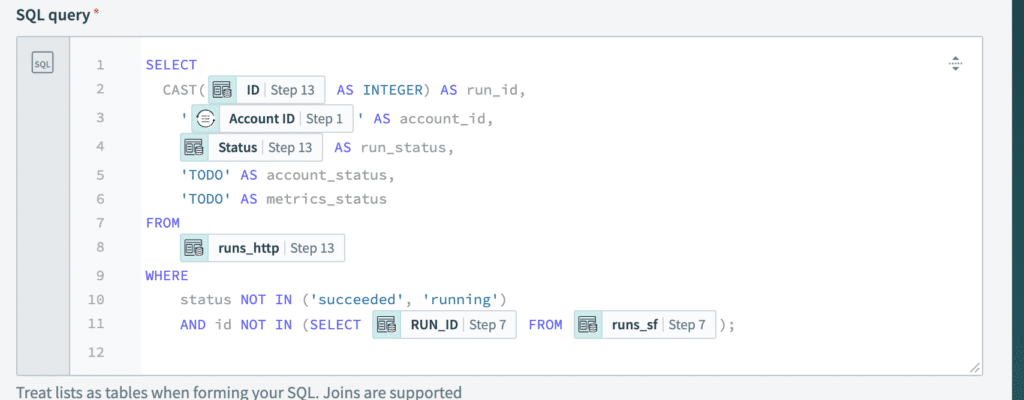

In the final phase of our migration process, we harness Workato’s advanced SQL capabilities to meticulously filter and map data according to the specific conditions outlined in our original DataWeave script.

Example SQL Query in Workato:

SQL Query inside Query lists in Collection action:

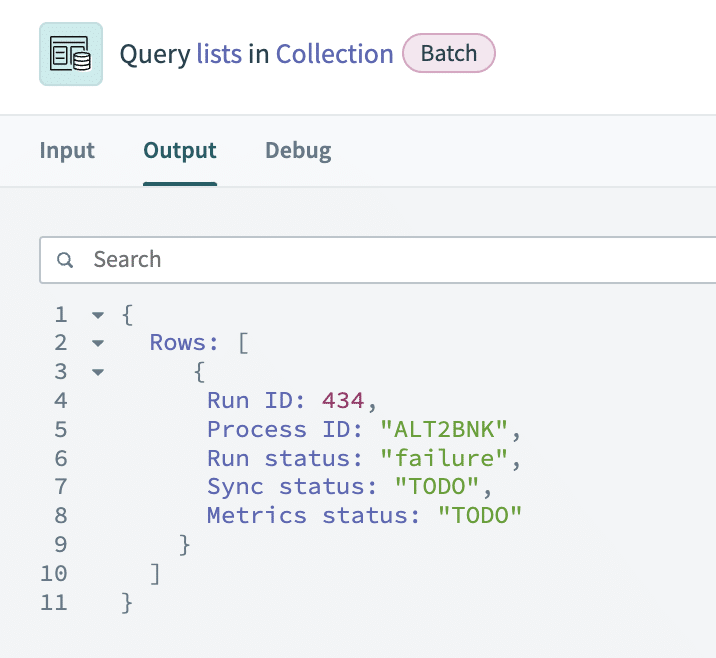

Output:

In this detailed example:

- Snowflake Query: This query retrieves runIds from the runs_http table in Snowflake, focusing on records where the status is not 'succeeded' or 'running'. This ensures that only relevant data, indicating failed or incomplete processes, is processed further.

- DUCO Call: Utilizing the Duco connector, we enhance our dataset by fetching essential data attributes such as runId and accountId. This supplementary data is crucial for comprehensive analysis and reporting.

- SQL Query: The SQL query plays a pivotal role in transforming the retrieved data. It casts the 'ID' field to an integer format as 'run_id' and assigns static values for 'account_status' and 'metrics_status'. This structured approach aligns closely with the data manipulation steps defined in our initial DataWeave transformation.

By leveraging Workato’s SQL capabilities in this manner, we ensure robust data filtering, mapping, and transformation processes. This not only facilitates seamless integration with existing systems but also enhances overall data accuracy and operational efficiency.

Conclusion ✅

Migrating DataWeave transformations to Workato involves leveraging Workato’s robust SQL capabilities and versatile connectors. By meticulously breaking down the transformation process into distinct phases—data retrieval, API integration, and SQL-based data manipulation—we effectively replicate the functionality of DataWeave. This methodical approach ensures a seamless migration while preserving the integrity and efficiency of the original transformation logic.

Workato, in partnership with Twenty20 Systems, stands out as a flexible and powerful platform for handling intricate data transformation tasks. Its comprehensive suite of tools and connectors empowers organizations to streamline integration workflows with ease. This article serves as a practical roadmap for developers embarking on similar migration projects, equipping them to fully harness Workato’s capabilities in optimizing data operations and enhancing overall efficiency.

In summary, transitioning from DataWeave to Workato meets the demands of modern data integration and unlocks new possibilities for scalable and agile data management solutions. By leveraging Workato, organizations can achieve greater operational agility and responsiveness in adapting to evolving business needs.

Ready to enhance your data transformation capabilities? Contact us today to explore how we can empower your organization to streamline workflows and optimize data operations.

About the Author

Vinay Kumar

Vinay brings a wealth of experience across various technologies to the Bangalore office. His "never give up" attitude makes him the go-to person for solving problems. A true team player, Vinay is admired for his positive outlook, willingness to help, and calm demeanor. When he's not working on challenging problems, Vinay finds adventure through traveling, trekking, and mentoring others. His passion for learning keeps him up-to-date by reading technical blogs and volunteering at events.