Twenty20 Systems Announces Strategic Partnership with Denodo

June 18, 2022

Twenty20 Systems Joins Mulesoft Partner Program

June 18, 2022

Large File Processing in Mule 4.0

Humans have generated more data in the past two years than in all of previous human history combined. It is estimated that 2.5 quintillion bytes of data are created each day, and that number is increasing. Data is commonly shared between source and target applications as large files. These large data files need to be processed quickly, efficiently, and without errors. Failures in transferring and processing large files have caused significant information loss for businesses, leading to outages and delays in mission-critical applications.

Large file processing considerations:

Large file processing is a common use case across verticals. While processing large files, a number of factors need to be considered.

- File size and format: The file size is the most important factor in large file processing. A larger file means finding efficient ways to break it down so that it can be loaded into memory and processed in pieces. The file format is also an important consideration. If the data is structured or semi-structured, breaking it down will be easier.

- Volume: The total volume of data available to be processed is usually measured in GB or TB and needs to be analyzed carefully before processing begins.

- Speed: We need to understand how quickly the data needs to be processed for the business to perform effectively. This will determine what type of software or hardware to use to process the files.

- Error handling: Data and processing errors can be a huge pain point when handling large files. Errors need to be handled carefully, and it is important to decide when an error should stop the process, skip a record, or be ignored.

How to solve in Mule 4:

With all these considerations in mind, we have developed an efficient solution to process large files in Mule 4. Mule 4 allows streaming and processing large files with out-of-the-box capabilities, and it is easier than ever!

Consider this use case: a large file (1GB+) with customer data needs to be picked up from a file location and sent to Salesforce Marketing Cloud (SFMC). If one tries to read this file, perform basic transformations, and send it to SFMC without any processing strategy, the Mule application will most likely run out of memory and crash. To avoid this, we must use streaming and design the process to handle the data in “chunks” rather than as one full file.

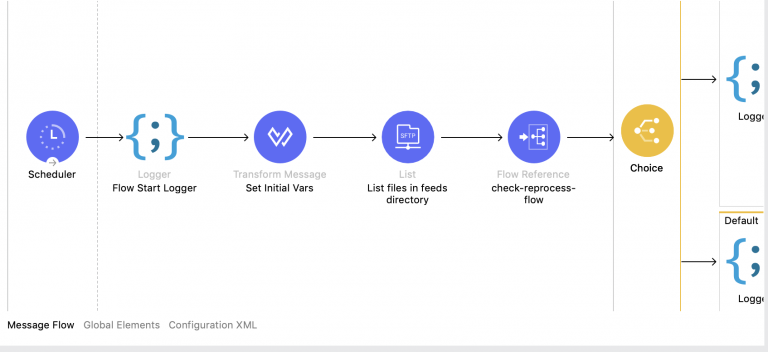
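To make the idea of "chunks" concrete, here is a minimal sketch in Python rather than Mule configuration. It only illustrates the principle that a stream can be consumed in fixed-size pieces so memory use stays flat regardless of file size. The function names and the 64 KB chunk size are my own assumptions for illustration, not anything taken from the Mule runtime.

```python
import io


def read_in_chunks(stream, chunk_size=64 * 1024):
    """Yield successive byte chunks from a binary stream until it is exhausted."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


def count_bytes(stream, chunk_size=64 * 1024):
    """Walk the whole stream while holding at most one chunk in memory."""
    total = 0
    for chunk in read_in_chunks(stream, chunk_size):
        total += len(chunk)
    return total


if __name__ == "__main__":
    # An in-memory stand-in for a large file; a real file opened with
    # open(path, "rb") would be consumed the same way.
    fake_file = io.BytesIO(b"x" * 150_000)
    print(count_bytes(fake_file))
```

The same loop works whether the source is 1MB or 1GB, because only one chunk is held at a time.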

For the solution, I have used a simple Scheduler to trigger the flow. I initially used an “On New or Updated File” trigger, but once I deployed the application to CloudHub, I noticed the trigger would intermittently throw errors. To solve this, I switched to a Fixed Frequency Scheduler and listed the available files in the directory I was polling as the first step in the flow. This strategy worked because the flow only continues if there are files to process.

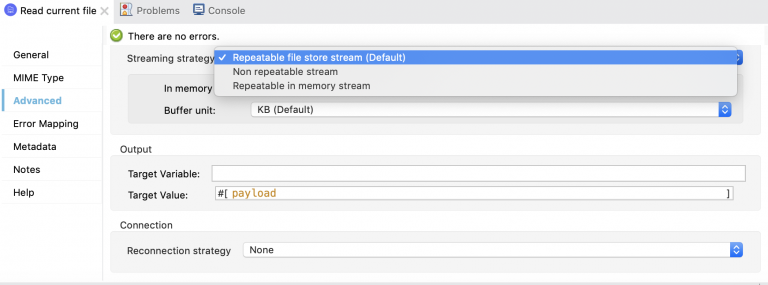

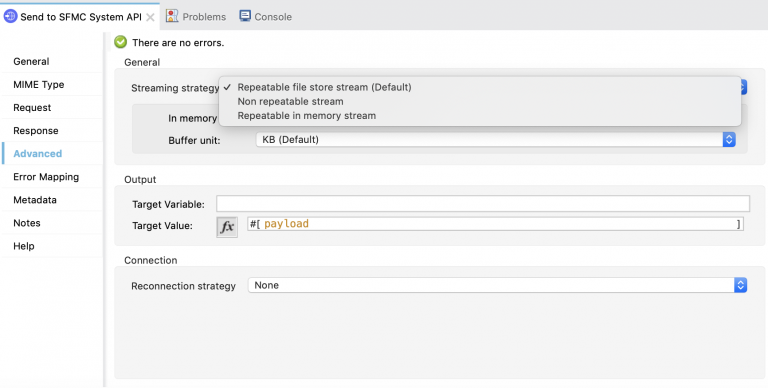
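The "list first, continue only if there is work" pattern can be sketched outside of Mule as well. The Python below is an illustration under my own assumptions: `list_pending_files`, `poll_once`, and the `*.csv` pattern are hypothetical names and values, and a local directory stands in for the SFTP location.

```python
import tempfile
from pathlib import Path


def list_pending_files(directory, pattern="*.csv"):
    """Return the files currently waiting in the polled directory, sorted by name."""
    return sorted(p for p in Path(directory).glob(pattern) if p.is_file())


def poll_once(directory, process):
    """Run one scheduler tick: process each pending file, or do nothing if none exist."""
    files = list_pending_files(directory)
    if not files:
        return 0  # nothing to do, so the rest of the flow is skipped
    for path in files:
        process(path)
    return len(files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as inbox:
        print(poll_once(inbox, print))  # empty directory: no processing happens
        (Path(inbox) / "customers.csv").write_text("id\n1\n")
        print(poll_once(inbox, print))  # one file is found and handed to process()
```

A scheduler would call `poll_once` at a fixed interval; the early return is what keeps empty ticks cheap.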

Once the files were ready to process, I read them from the directory using an SFTP Read and chose the Repeatable file store stream option.

I have found that, depending on the use case, two streaming strategies work best. The default Repeatable file store stream streams the file, keeps only a customizable chunk in memory, and allows you to read the payload multiple times during processing. The Non repeatable stream simply streams the file once for you to do the processing. Which option you choose will depend on the individual case. If you have logic that runs before the file is processed, you may want to use a non repeatable stream to trigger the event, save the file path, and read the file when you are ready to process the file data.

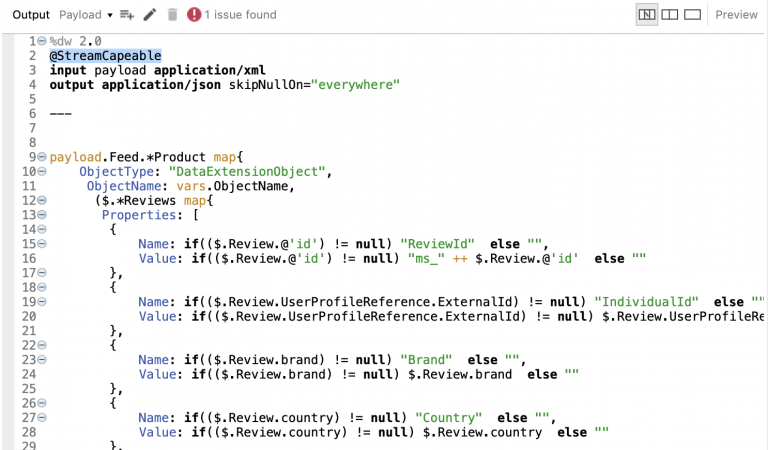
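The difference between the two strategies can be illustrated with a rough Python analogy. This is not how Mule implements its streams; it is a sketch that uses the standard library's `tempfile.SpooledTemporaryFile`, which keeps data in memory up to a size limit and spills to disk beyond it. The helper name `buffer_repeatable` and the sizes used are assumptions for illustration.

```python
import io
import tempfile


def buffer_repeatable(source, in_memory_size=512 * 1024, chunk_size=64 * 1024):
    """Copy a one-shot stream into a buffer that can be re-read from the start.

    Data stays in memory until it exceeds in_memory_size, then spills to a
    temporary file on disk.
    """
    buffered = tempfile.SpooledTemporaryFile(max_size=in_memory_size)
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        buffered.write(chunk)
    buffered.seek(0)
    return buffered


if __name__ == "__main__":
    one_shot = io.BytesIO(b"id,name\n" * 1000)  # stand-in for a non repeatable stream
    repeatable = buffer_repeatable(one_shot, in_memory_size=1024)

    first_pass = repeatable.read()
    repeatable.seek(0)  # rewinding is what makes a second read possible
    second_pass = repeatable.read()

    print(first_pass == second_pass)
    print(one_shot.read())  # the original source is already exhausted
    repeatable.close()
```

The trade-off mirrors the one described above: the repeatable buffer costs extra disk and copying, while the one-shot source is cheaper but can only be consumed once.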

Once you are ready to process the file, its content is available as a stream. As soon as you trigger a transformation, however, the data is loaded in its entirety, which can cause major issues when transforming a large amount of data. There are several ways around this. If you must transform the data in its entirety, there is an option to stream the transformation as well, using @StreamCapable in the transform.

In my experience, streaming the transformation can make the difference in being able to process 1GB of data in about a minute. We will discuss optimization a little later.
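To show what a streamed transformation means in general terms, here is a small Python sketch (not DataWeave). Each record is mapped as it is read, so the full input never has to sit in memory. The column names `first_name`, `last_name`, and `email`, and the output field names, are hypothetical and only serve the example.

```python
import csv
import io


def transform_rows(lines):
    """Lazily map each CSV record to the target shape, one row at a time."""
    for row in csv.DictReader(lines):
        yield {
            "email": row["email"].strip().lower(),
            "fullName": f'{row["first_name"]} {row["last_name"]}'.strip(),
        }


if __name__ == "__main__":
    # A tiny in-memory sample; an open text file would be consumed the same way.
    sample = io.StringIO(
        "first_name,last_name,email\n"
        "Ada,Lovelace, ADA@Example.com \n"
        "Alan,Turing,alan@example.com\n"
    )
    for record in transform_rows(sample):
        print(record)
```

Because `transform_rows` is a generator, nothing is transformed until a downstream step asks for the next record.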

To then send this transformed data using an HTTP Request connector, we may not be able to send the data all at once, depending on what the target system or System API will accept. If you are using the HTTP Request operation, there is an option to stream the data:

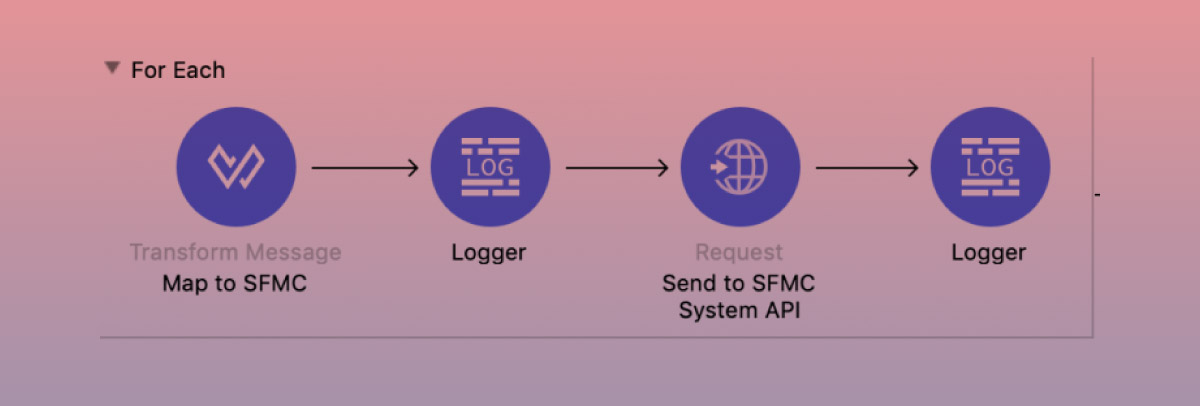

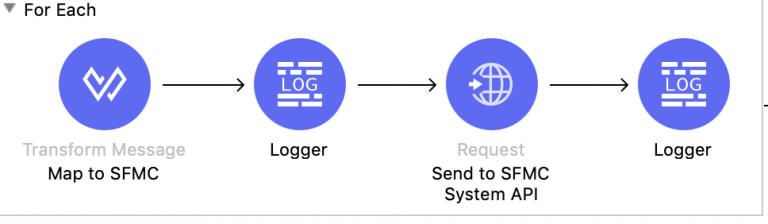

In addition, we must process the data in chunks, using either a “For Each” with an optimized collection size or a Batch Process. I have found both to be useful; which one fits depends on the use case. How fast and how efficiently the data is processed comes down largely to the batch size or collection size.

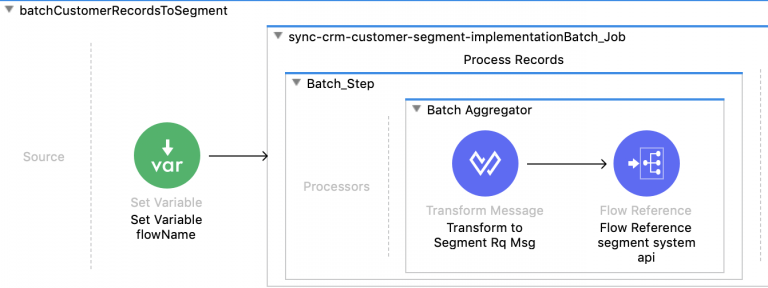
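The effect of a collection size or batch size can be sketched in Python as grouping a stream of records into fixed-size lists and sending each list as one request. This is an illustration only: `batched`, `send_in_batches`, and the `send` callback are hypothetical names, and `send` stands in for whatever call delivers a batch to the target system.

```python
from itertools import islice


def batched(records, batch_size):
    """Group any iterable of records into lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def send_in_batches(records, batch_size, send):
    """Call send() once per batch and return how many batches were sent."""
    sent = 0
    for batch in batched(records, batch_size):
        send(batch)
        sent += 1
    return sent


if __name__ == "__main__":
    requests = []
    count = send_in_batches(range(7), 3, requests.append)
    print(count, requests)
```

A larger batch size means fewer calls to the target but more data held in memory per call, which is why this number is worth tuning per use case.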

If you choose to use a Batch Process, I highly recommend putting the transformation inside the batch process if the use case allows it.

In the end, each case is different. However, streaming and processing large files has become much easier in Mule 4, with out-of-the-box capabilities in most of the connectors provided.

Further Optimization

- Decide on the streaming buffer size that gives the best performance

- If using batch, decide on the batch size

- If retrieving from a database, stream the results and chunk the response using the max rows size

- When deploying to CloudHub, the worker size and/or the number of workers may need to be increased
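When deciding on a batch size, a quick back-of-the-envelope calculation can help. The Python sketch below is an assumption-laden estimate, not a measurement: it only relates a record count, a batch size, and an average record size (all hypothetical inputs you would supply) to the number of batches and the approximate payload per batch.

```python
import math


def batch_plan(total_records, batch_size, avg_record_bytes):
    """Estimate how many batches are needed and roughly how many bytes each carries."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return {
        "batches": math.ceil(total_records / batch_size),
        "bytes_per_batch": batch_size * avg_record_bytes,
    }


if __name__ == "__main__":
    # Illustrative numbers: one million records of about 1 KB each.
    for size in (500, 2000, 10000):
        print(size, batch_plan(1_000_000, size, 1024))
```

Real throughput also depends on the target system's limits and the worker's memory, so numbers like these are a starting point for testing rather than a final answer.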